The Blockchain Debate Podcast

Motion: ZK rollup has a better set of security/scalability tradeoff than optimistic rollup (Alex Gluchowski vs. John Adler, co-host: James Prestwich)

Guests:

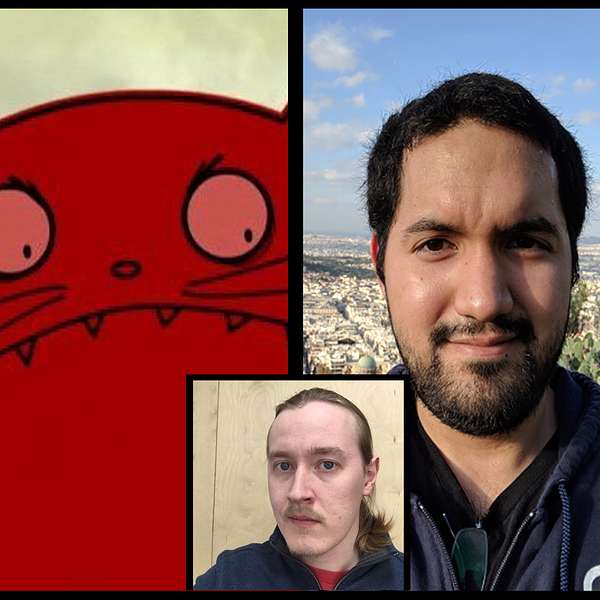

Alex Gluchowski (@gluk64)

John Adler (@jadler0)

Host:

Richard Yan (@gentso09)

James Prestwich (@_prestwich, special co-host)

Today’s motion is “ZK rollup has a better set of security/scalability tradeoff than Optimistic rollup.”

Rollups are a class of layer-2 Ethereum scalability solutions. They allow an off-chain aggregation of transactions inside a smart contract. Users can transact inside the contract with security guarantees, and they will settle to the mainchain at some future point.

ZK and optimistic rollups are different in the way they ensure the validity of these transactions that are being kept off-chain.

The ZK approach uses math. It bundles the transactions, compresses them, and adds a zero-knowledge proof that indicates the validity of the state transitions. When the transaction is sent to the mainchain, the block is verified by the attached zero-knowledge proof.

The optimistic approach uses economic incentives. An operator publishes a state root that isn’t constantly checked by the rollup smart contract. Instead, everybody hopes that the state transition is correct. However, other operators or users can challenge the validity of the transactions, revert the incorrect block, and slash malicious operators.

We compared the two approaches from the standpoint of security, usability, capital efficiency of exits and more.

Today’s debaters are John Adler and Alex Gluchowski. John is the proposer of the original construction of the optimistic rollup and co-founded Celestia, and Alex is implementing a ZK rollup at Matter Labs. Our co-host James Prestwich is a security consultant and auditor for Solidity contracts, among many other things.

If you’re into crypto and like to hear two sides of the story, be sure to also check out our previous episodes. We’ve featured some of the best known thinkers in the crypto space.

If you would like to debate or want to nominate someone, please DM me at @blockdebate on Twitter.

Please note that nothing in our podcast should be construed as financial advice.

Source of select items discussed in the debate:

- Coindesk's layman guide for rollups: https://www.coindesk.com/ethereum-dapps-rollups-heres-why

- Alex Gluchowski on the difference between the two rollups: https://medium.com/matter-labs/optimistic-vs-zk-rollup-deep-dive-ea141e71e075

- John Adler explains optimistic rollup: https://medium.com/@adlerjohn/the-why-s-of-optimistic-rollup-7c6a22cbb61a

- Celestia: https://celestia.org/

- Fuel Labs: https://fuel.sh/

- Matter Labs: https://matter-labs.io/

Debater bios:

Alex Gluchowski is co-founder of Matter Labs, currently working on scaling Ethereum with zkSNARKs. He was previously CTO of PaulCamper, an online platform for sharing campervans and caravans in Europe.

John Adler is co-founder of Celestia and Fuel Labs. He is the original proposer of the optimistic rollup construction. He previously did layer-2 research at ConsenSys. Interestingly, he is a self-proclaimed blockchain skeptic.

James Prestwich founded cross-chain solution company Summa, subsequently acquired by the layer-1 blockchain firm Celo. He previously founded Storj, a decentralized cloud storage provider.

The Blockchain Debate Podcast - Rollups

Richard: [00:00:00] Welcome to another episode of the Blockchain Debate Podcast, where consensus is optional, but proof of thought is required. I'm your host Richard Yan. Today's motion is: ZK roll-up has a better set of security/scalability trade-off than optimistic roll-up. Roll-ups are a class of layer-2 Ethereum scalability solutions, they allow an off-chain aggregation of transactions inside a smart contract. Users can transact inside the contract with security guarantees and they will settle to the main chain at some future point.

[00:00:40] So ZK and optimistic roll-ups are different in the way they ensure the validity of these transactions that are being kept off-chain. The ZK approach uses math. It bundles the transactions, compresses them, and adds a zero-knowledge proof that indicates the validity of the state transitions. When the transaction is sent to the main chain, the block is verified by the attached zero-knowledge proof.

[00:01:05] The optimistic approach uses economic incentives. So an operator publishes a state root that isn't constantly checked by the roll-up smart contract. Instead, everybody hopes that the state transition is correct. Now other operators or users can challenge the validity of the transactions, revert the incorrect block and slash malicious operators.

[00:01:27] We compared the two approaches from the standpoint of security, usability, capital efficiency of exits, and more. Today's debaters are John Adler and Alex Gluchowski. John is the proposer of the original construction of the optimistic roll-up, and Alex is implementing a ZK roll-up at Matter Labs. Our co-host James Prestwich is a security consultant and auditor for Solidity contracts, among many other things.

[00:01:55] If you're into crypto and like to hear two sides of the story, be sure to also check out our previous episodes. We featured some of the best-known thinkers in the space. If you would like to debate or want to nominate someone, please DM me @blockdebate on Twitter. Please note that nothing in our podcast should be construed as financial advice. I hope you enjoy listening to this debate. Let's dive right in.

[00:02:16] Welcome to the debate. Consensus optional, proof of thought required. I'm your host, Richard Yan. Today's motion: ZK rollup has a better set of security/scalability trade-off than an optimistic rollup. To my metaphorical left is Alex Gluchowski, arguing for the motion. He agrees that ZK rollup has a better set of security/scalability trade-off than an optimistic rollup. To my metaphorical right is John Adler, arguing against the motion. He disagrees that the ZK rollup has a better set of security/scalability trade-off than an optimistic rollup. In my metaphorical middle is James Prestwich, who will be co-hosting this debate. I'm super excited to have you on the show. Welcome.

[00:02:58] James: [00:02:58] It's great to be here.

[00:02:59] Alex: [00:02:59] Hello.

[00:03:01] Richard: [00:03:01] Here are the bios for the two debaters and our co-host. Alex Gluchowski is co-founder of Matter Labs, currently working on scaling Ethereum with ZK-SNARKs. He was previously CTO of PaulCamper, an online platform for sharing campervans and caravans in Europe. John Adler is co-founder of LazyLedger and Fuel Labs. He is the original proposer of the optimistic rollup construction. He previously did layer-two research at ConsenSys. Interestingly, he is a self-proclaimed blockchain skeptic. James Prestwich founded cross-chain solution company Summa, subsequently acquired by the layer-one blockchain firm Celo. He previously founded Storj, a decentralized cloud storage provider.

[00:03:42] We normally have three rounds, opening statements, host questions, and audience questions, but there are so many audience questions that we're incorporating them into the host questions. And James will be doing the heavy lifting during the host questions round. Currently, our Twitter poll shows that 46% agree with the motion and 21% disagree with the motion.

[00:04:02] After the release of this recording, we'll also have a post-debate poll. Between the two polls, the debater with a bigger change in percentage votes in his or her favor wins the debate. Okay, Alex, please go ahead and get started with your opening statement, and please make sure to include an introduction of the ZK rollup in your opening statement.

[00:04:23] Go ahead, Alex.

[00:04:25] Alex: [00:04:25] Sure. Thank you, Richard. ZK rollup is the most exciting scaling technology I'm familiar with because, unlike all other scaling technologies, it actually does scaling. With the application of heavy math, namely zero-knowledge proofs, a very interesting family of cryptographic protocols, we can achieve a compression of computation. We can generate a proof of integrity for a very large algorithm, which can encompass as much as all financial transactions in the world.

[00:05:03] Which you can verify from your mobile phone in 30 milliseconds. So this is a technological breakthrough, which will bring, in my opinion, very soon, mainstream adoption to all public decentralized blockchains. This is actually the only technology which can do it without making the trade-offs on the infamous scalability trilemma.

[00:05:27] And I would go as far as to say that the statement should be even harsher. I would say that ZK rollups are superior technologically in practically every dimension, on every metric, compared to an optimistic rollup. There have been some things in the past where optimistic rollups were better.

[00:05:49] But this is not the case anymore, because we have had tremendous progress on zero-knowledge proofs in general, and ZK rollups specifically, over the course of the past two years, especially this year. And this is going to be a very interesting development to watch because, potentially, by the time optimistic roll-ups are ready for production, they might just be an obsolete technology.

[00:06:15] Richard: [00:06:15] So John, please give your opening statement and make sure to include an introduction of the optimistic rollup as well.

[00:06:22] John: [00:06:22] Thanks, Richard. Thanks, Alex. I will try to do my best here to also briefly explain ZK rollups before talking about optimistic rollups. But for the opening statement, optimistic rollups are probably, in my opinion, the second most exciting avenue or direction for scaling blockchains today.

[00:06:42] The first is not ZK rollups, spoiler alert. They allow us to essentially get most of the benefits of sharding, which you may have heard is being proposed for systems like Serenity, sometimes called ETH 2; other chains like Polkadot and Near, and so on, are also proposing to do sharded execution.

[00:07:03] Optimistic rollups essentially allow us to get all the benefits of that, without having to wait for full-blown sharding at the base layer. We can do it substantially sooner, within, on the order of months, not years, as the meme would go. And it allows us to do this kind of sharding with relatively few constraints.

[00:07:24] The only constraint currently for optimistic rollups that are built on top of Ethereum is that you need to be able to verify a fraud proof in the EVM, which in some ways is actually a fairly big constraint, but in theory, you're relatively unconstrained in what you can do. This is as opposed to when you use zero-knowledge proofs, where you generally have some pretty strong constraints on what specific type of execution model you can have, although I'm sure we're going to discuss this point further into the call. Now a brief overview of how ZK rollups and optimistic rollups work. ZK rollups are substantially simpler, so I'll start with those. Essentially, in a ZK rollup, you collect a bunch of transactions that would go to your rollup.

[00:08:08] You create a zero-knowledge validity proof that, yes, these transactions are valid and they cause a valid state change from a previous state to a next state. And then you post all of this data onto an Ethereum smart contract. Since you're using a validity proof, you don't have to worry about any rollbacks or anything like that.

[00:08:28] As soon as the contract sees this blob of transactions, it verifies the proof, which it can do in exponentially less time. And then you just immediately know it's valid. Optimistic rollups are a bit more complicated. The way fraud proofs work in general is that you see a claim, and if the claim has not been disputed after some timeout, then you consider that claim valid. So in the context of rollups, you have someone create a block and post that block to an Ethereum contract. And then if a fraud proof has not been posted against that block for some timeout, you consider the block valid. So there is a kind of higher latency before the contract can consider the block valid.

[00:09:16] And there's, beyond this, you can also have optimistic rollups with interactive verification games, which we won't really cover here, but that's the high-level description of both ZK and optimistic rollups.
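The two acceptance rules John outlines can be sketched as a toy model. This is only an illustration under stated assumptions, not the contract of any project mentioned in the debate: the class names, the `verify_proof` callback (a stand-in for a real SNARK verifier), and the one-week `CHALLENGE_WINDOW_SECONDS` are all hypothetical, and the optimistic model ignores that reverting a block would also revert its descendants.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Set

# Hypothetical challenge window; the debate mentions one to two weeks.
CHALLENGE_WINDOW_SECONDS = 7 * 24 * 60 * 60


@dataclass
class ZkRollupContract:
    """Toy ZK rollup: a block is applied only if its validity proof verifies."""
    # Stand-in for a real proof verifier (an assumption, not a library call).
    verify_proof: Callable[[str, str, bytes], bool]
    state_root: str = "genesis"

    def submit(self, new_root: str, proof: bytes) -> bool:
        # Invalid blocks are rejected up front, so nothing is ever rolled back.
        if not self.verify_proof(self.state_root, new_root, proof):
            return False
        self.state_root = new_root
        return True


@dataclass
class OptimisticRollupContract:
    """Toy optimistic rollup: a block counts as valid unless challenged in time."""
    posted_at: Dict[str, float] = field(default_factory=dict)
    reverted: Set[str] = field(default_factory=set)

    def submit(self, new_root: str, now: float) -> None:
        # No proof is checked here; the contract only records the claim.
        self.posted_at[new_root] = now

    def challenge(self, root: str, fraud_proof_valid: bool, now: float) -> bool:
        # A fraud proof only counts if it arrives inside the challenge window.
        if root not in self.posted_at or root in self.reverted:
            return False
        in_window = now - self.posted_at[root] < CHALLENGE_WINDOW_SECONDS
        if in_window and fraud_proof_valid:
            self.reverted.add(root)
            return True
        return False

    def is_accepted(self, root: str, now: float) -> bool:
        # Accepted once the window has passed without a successful challenge.
        if root not in self.posted_at or root in self.reverted:
            return False
        return now - self.posted_at[root] >= CHALLENGE_WINDOW_SECONDS
```

In this model the ZK contract can never hold an invalid root, while the optimistic contract accepts whatever goes unchallenged for the full window, which is the distinction the rest of the debate keeps returning to.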

[00:09:26] Richard: [00:09:26] Great. Thank you for the opening statements. Let's move on to round two, which is a bit of a free for all. The host will be asking questions and James will be doing most of the questions from this point on. James, do you want to take over?

[00:09:42] James: [00:09:42] Yeah, definitely. Thanks, Richard. I think we have a good overview of the different technologies from the opening statements. And I want to start with an audience question from Mark Tyneway, who is an engineer at Optimism, and Mark asks for each debater, what is the best part of your opponent's technology?

[00:10:01] Let's start with, Alex.

[00:10:03] Alex: [00:10:03] Yeah, I think that the best part of optimistic rollups is actually this optimistic mood, which it has been radiating for all of the last year, because it kept the community hopeful that a solution for Ethereum's problems would be there while ZK rollups were in this kind of R&D discovery mode.

[00:10:26] How do we solve for the generic programmability? It was not very clear how quickly this can happen. Whereas optimistic rollups offered a relatively straightforward solution. And I think it was very beneficial for the entire community to just have this certainty that at least some solution will be there in the near term.

[00:10:47] James: [00:10:47] Interesting. John?

[00:10:48] John: [00:10:48] Sure. I like Alex's point that optimistic rollups allowed us to believe that scaling was right around the corner, because supposedly sharding and Serenity were also supposed to be right around the corner. But more to the point.

[00:11:04] I think there are two really interesting things about ZK rollups. The first is that you can prove that a computation was valid using exponentially less work, which is very useful if you are doing a very large amount of computation and a very small amount of state transitions.

[00:11:23] The second thing that ZK rollups, and validity proofs in general, are good at is low-latency light clients. If you consider the rollup contract that's on-chain as a type of light client in some ways, because it doesn't execute transactions, it means that you can have light clients with good security guarantees and low latency, which allows you to do things like atomic composable transactions between a ZK rollup and a contract on layer one.

[00:11:52] And it allows you to quickly withdraw non-liquid tokens.

[00:11:57] James: [00:11:57] Interesting. So you would say that one of the primary benefits of ZK rollups is the low latency and how it interacts with other contracts on layer one.

[00:12:05] John: [00:12:05] I would say low latency is a loaded term. There's zero block latency between being able to post a ZK rollup block and being able to withdraw funds; you can do it in the same block. With optimistic rollups, you would probably need at least one block of latency, which means that you can't do something like, I dunno, a flash loan between the two systems, which you probably could do, with a lot of work, between a ZK rollup and the main layer-one chain.

[00:12:36] James: [00:12:36] Yeah. I think speaking of latency and flash loans and atomic transactions lead into another audience question, @leanthebean wanted to hear about finality in the rollup. How long does it take optimistic rollup to achieve finality from a user perspective and how does it achieve that?

[00:12:55] John: [00:12:55] Sure. So I guess this question is directed at me first. Finality is also a loaded term, unfortunately,

[00:13:02] like the word better.

[00:13:03] James: [00:13:03] Are there any non-loaded terms?

[00:13:05] John: [00:13:05] It's less about loaded versus unloaded than it is, let's try and break it down into its fundamental components, if you're okay with that. Sure. So in terms of finality, first of all, how would we define finality?

[00:13:16] It's the time it takes before a transaction, you can be guaranteed that it's not going to be reverted.

[00:13:23] James: [00:13:23] With negligible probability.

[00:13:25] John: [00:13:25] Yeah. And Bitcoin has probabilistic finality, which is, you add some probabilities there under certain assumptions. Under the assumption that the majority of hash rate or validators are honest, then after this time you can be assured that this transaction, once it's included on-chain, won't be reverted. You also have to break this down in terms of latency to first confirmation. Unlike channels, which have immediate or instant finality as soon as you get some consensus on a state update in the channel, rollups need to post their blocks on-chain. So a rollup by itself will always be at least as slow as the main chain. You can't have 0.4-second confirmations in a rollup. You can do other stuff, like having bonded aggregators that put up some bonds to say that they're going to have a faster finality.

[00:14:16] But it's not really finality. It's more that there's some economics there, and once you introduce these economics, it's complicated. So we kind of have to consider two things. The first is, what is the latency to first confirmation for a transaction in an optimistic rollup? And the second is, what is the time to finality once it's on-chain? The latency to first confirmation for an optimistic rollup is basically the first Ethereum block that's going to be mined. There are no proofs to generate. As soon as an aggregator collects an optimistic rollup block, they can put it on-chain. So latency to first confirmation is around six seconds with no further caveats.

[00:14:51] Other than that, there should be sufficient transaction throughput to merit posting an optimistic rollup block every six seconds or 12 seconds. The time to finality after it's posted on-chain is immediate. It's instant. As soon as a valid optimistic rollup block has been posted on-chain, it is finalized. It will not be reverted so long as it is valid. This is distinct from the withdrawal latency. The withdrawal latency is not part of finality; it is something different. For an optimistic rollup, you want the withdrawal latency to be on the order of one to two weeks, or some long period of real time, which I'm sure we'll cover why shortly in this call. You want this to be some long period of human-readable time, some period of time that humans can interpret, as opposed to just on the order of seconds. So those are the three facets: a very short time to first confirmation; immediately final as soon as it's posted on-chain, assuming it's valid; and a withdrawal latency on the order of one to two weeks.
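John's three facets can be written down as simple arithmetic. The sketch below is only a restatement of his claims, with hypothetical names; the six-second and one-week figures are the ones he quotes, not measured values.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class OptimisticTimeline:
    """Timings for one optimistic rollup block, per John's description."""
    first_confirmation_s: float  # wait until the rollup block lands on layer one
    withdrawal_delay_s: float    # on-chain challenge window before exits

    def finality_s(self, block_is_valid: bool) -> Optional[float]:
        # John's claim: a valid block is final the moment it is on-chain.
        # An invalid block never becomes final in this sense.
        return self.first_confirmation_s if block_is_valid else None

    def withdrawal_s(self) -> float:
        # Funds can only leave after the challenge window has also elapsed.
        return self.first_confirmation_s + self.withdrawal_delay_s


# Figures quoted in the debate: ~6 seconds, and a one-week window.
timeline = OptimisticTimeline(first_confirmation_s=6, withdrawal_delay_s=7 * 86400)
```

The catch is the `block_is_valid` argument: someone has to know whether the block is valid, and how a user or contract learns that is exactly what the following exchange disputes.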

[00:15:53] James: [00:15:53] Alex, do you think that is a fair assessment of optimistic rollups' time to user-subjective finality?

[00:15:59] Alex: [00:15:59] I don't think that time to first confirmation makes any meaningful difference, because you can have the same thing with ZK rollups, and we have to look at finality from the point of view of users. I think the majority of users would define finality as the point in time where transactions reach the same irreversibility guarantees as though the transaction actually happened on the underlying layer one.

[00:16:31] And John is right that the transaction cannot be reverted. But from the point of view of layer one, that's not the case. He refers to this property as withdrawal finality, but it's not just withdrawals. It's any message which is passed from the optimistic rollup to layer one. And also from the point of view of light clients, which John previously correctly pointed out as being one of the foundations of ZK rollups. So for light clients, the observable finality equals the layer-one finality, which is going to be this long period of time of one or two weeks, which most researchers consider to be a minimum security threshold.

[00:17:18] James: [00:17:18] John also mentioned a kind of interesting property is that a block cannot be reverted, assuming it is valid. Is the contrapositive true for optimistic rollups? John, can an invalid block be accepted?

[00:17:33] John: [00:17:33] An invalid block can be accepted if there is no fraud-proof posted on-chain

[00:17:39] James: [00:17:39] Okay.

[00:17:39] John: [00:17:39] Two weeks

[00:17:40] James: [00:17:40] Alex, is it possible for a ZK rollup contract to accept an invalid block?

[00:17:47] Alex: [00:17:47] It is not possible, because every block in ZK rollups requires a corresponding validity proof. So the state transition will simply not happen unless the block producer provides a zero-knowledge proof that this block has been vetted.

[00:18:03] James: [00:18:03] Interesting. So to kind of recap, the finality of a rollup should be measured in the underlying layer one's finalization speed, plus, for optimistic rollups, this fraud-proving period. Because the optimistic rollup can accept an invalid block, you have to wait for that period to see if something will be finalized. Is that pretty reasonable, John?

[00:18:26] John: [00:18:26] Not exactly. The reason is that you can, in fact, distribute fraud proofs in the peer-to-peer network, and when distributing fraud proofs in the peer-to-peer network you can assume much tighter timeouts, because you don't really have to get consensus on this. So a light client of an optimistic rollup doesn't have to wait for whatever the timeout is on-chain before it accepts or rejects a block. It can locally consider a block valid or invalid much faster by relying on peer-to-peer distributed fraud proofs, or the lack thereof.

[00:19:02] James: [00:19:02] By relying on the existence of some honest node out there that it

[00:19:07] John: [00:19:07] Yes. It's a light client, so yes. If you want to have secure light clients, then either you wait for a really long timeout, at which point it's not really a light client, it's more like some super-light client or something, or you have the standard secure light client assumption, which is that there's one honest node out there.

[00:19:23] James: [00:19:23] Alex. You were going to say something.

[00:19:25] Alex: [00:19:25] I would strongly disagree, because you cannot rely on the absence of evidence and take it for the evidence of absence of something. You can be eclipsed in your peer-to-peer topology, and you will just not be able to get the fraud proofs from the network, or the network could be segmented.

[00:19:44] And then the fraud proofs will not reach other parts. So you cannot say that because I haven't observed anything in my local peer-to-peer network, that means there is no fraud proof happening somewhere, or that there is no invalid transaction. Another reason why there could be an invalid transaction and you don't see any fraud proof is that nobody actually observed it.

[00:20:07] So in optimistic rollups you rely on one honest participant who monitors the network at all times. But who's actually going to do this? What's the incentive mechanism for people to watch? My understanding is that the only people who would be incentivized to actually monitor the network and check the proofs would be the validators or block producers of this network who produce the blocks.

[00:20:31] Because they build on the blocks of other block producers, and should any of the previous blocks be invalid, they would be slashed, or they would not get the rewards they were hoping for. So they have an incentive for this. But if all of them collude, or if all of them are disabled in other ways, if they're being hacked, if they get some wrong version of software installed, or maybe their DevOps teams get socially engineered and collude, then the fraud-proof-generating software will just be disabled and no fraud proofs will be broadcast. So as a light client, you can't really rely on the absence of something until the time passes and you can check what the root state hash on Ethereum actually is.
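The disagreement between John and Alex here can be made concrete with a small model of a light client that accepts a block when no fraud proof has arrived within a local timeout. Everything below is a hypothetical sketch: the `Peer` type, the function names, and the timeout values are illustrative assumptions, not any client's real logic.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class Peer:
    honest: bool     # an honest full node checks blocks and gossips fraud proofs
    reachable: bool  # False models an eclipsed or partitioned connection


def fraud_proofs_seen(invalid_blocks: Set[str], peers: List[Peer]) -> Set[str]:
    # A fraud proof reaches the client only through an honest, reachable peer.
    if any(p.honest and p.reachable for p in peers):
        return set(invalid_blocks)
    return set()


def light_client_accepts(block_id: str, seen: Set[str],
                         waited_s: float, local_timeout_s: float) -> bool:
    # John's rule: no fraud proof within the local timeout means "valid".
    if block_id in seen:
        return False
    return waited_s >= local_timeout_s


invalid = {"block_42"}

# One honest, reachable peer: the invalid block is rejected quickly.
healthy = [Peer(honest=True, reachable=True), Peer(honest=False, reachable=True)]
ok_case = light_client_accepts("block_42", fraud_proofs_seen(invalid, healthy), 60, 60)

# Alex's objection: the only honest peer is cut off, so silence looks like validity.
eclipsed = [Peer(honest=True, reachable=False), Peer(honest=False, reachable=True)]
bad_case = light_client_accepts("block_42", fraud_proofs_seen(invalid, eclipsed), 60, 60)
```

Under the one-honest-node assumption the rule works (`ok_case` is `False`); when that assumption fails, the same rule accepts the invalid block (`bad_case` is `True`), which is the absence-of-evidence problem Alex raises.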

[00:21:23] James: [00:21:23] It seems like that argument could apply directly to the on-chain contracts, running the optimistic rollup. Why can the contract rely on, absence of evidence in a certain timeout, while the light client can't? Either person, whoever wants to jump in first.

[00:21:39] John...

[00:21:39] Alex: [00:21:39] I think it's you.

[00:21:41] John: [00:21:41] Alex is the one who initially made the claim that you can't rely on the absence of evidence.

[00:21:46] Alex: [00:21:46] Well. Okay. I can,

[00:21:48] John: [00:21:48] Go ahead, you can start.

[00:21:50] Alex: [00:21:50] So finality in optimistic rollups is most important for communication to light clients. Essentially, what it means when the block is final is that you can access it on layer one. The state of an optimistic rollup is some extension of layer one, as is the case with ZK rollups; it's a layer 1.2 solution where you're actually rooted on the main chain. So, as a light client, you can be sure that you can access this money if there were no invalid blocks during this period of time. No one will be able to revert them. Even if there were invalid blocks, but your particular account was not affected by them, the time window for posting the fraud proofs is over.

[00:22:41] So your state, which is now finalized by the passing of the time period, is immutable. And assuming that the further operation of the optimistic rollup remains correct, you will be able to take this money and exit to layer one sometime in the future. So even if there was a problem, you won't be affected, and this is the meaning of finality from the point of view of a light client.

[00:23:08] James: [00:23:08] Okay. Interesting. I guess my observation here is that the on-chain contract's subjective timeout, after which it finalizes optimistic rollup blocks, is functionally equivalent to an off-chain light client's subjective timeout. It doesn't seem that there is a technical difference between those two things.

[00:23:30] Alex: [00:23:30] I think one technical difference is that you actually cannot submit any fraud proofs for blocks which are earlier than this period.

[00:23:38] James: [00:23:38] You can't submit any of them to the contract, but you could still submit them to other peers on the peer-to-peer network.

[00:23:44] Alex: [00:23:44] But they would not be able to do anything with them. So this would be irrelevant information. Just some past deeds, let's let bygones be bygones.

[00:23:55] James: [00:23:55] Okay. All right. So moving on from finalization a little bit, because we've spent a lot of time on it so far. What do you think users actually care about with a rollup? What do you think will drive them to use your rollup? Let's start with John this time.

[00:24:10] John: [00:24:10] By "your rollup," do you mean specifically the rollups, the kind of designs or implementations that we're working on, or optimistic / ZK

[00:24:20] James: [00:24:20] I'd like to talk about the specific designs you're working on right now.

[00:24:23] John: [00:24:23] Alright. So in terms of what users care about most, I would say that I personally have no clue whatsoever. Lots of people say they want certain things and then they don't actually. And then lots of people say they don't want certain things, but really they do. What blockchain users want, versus what degens want, versus what average users that are currently using blockchains want, is up in the air.

[00:24:51] If any of us knew exactly what people really wanted deep down, then we would probably be very rich and we wouldn't be having this discussion. So I don't know what people really want, but in terms of what we see, it seems to me, at least in the Ethereum space, that there's been an increasing use or acceptance of decentralized finance or open finance protocols that allow you to do certain financial things in a way that is permissionless in some ways, trustless in some other ways, to varying degrees, as a kind of alternative to traditional financial instruments, which I think is particularly interesting. How we will get there and how useful this particular DeFi movement will be, I don't know. I guess we'll find out.

[00:25:42] Now in terms of what will drive people to the rollup that I in particular am working on, which is Fuel from Fuel Labs. To give a brief one-sentence overview, we're using a UTXO-based data model that allows for much higher transaction throughput on the same hardware.

[00:26:00] And we're primarily focused, at least initially, on payments, basic payment applications. And then we are subsequently working on Ethereum-style smart contracts in the next year or two. So why would people want to use our rollup? I think the answer is obvious. At least compared to other optimistic rollups, we offer by far the cheapest and most scalable way of doing value transfer.

[00:26:25] There is basically not even a remote comparison between the scalability and performance of Fuel versus other optimistic rollups. So in that sense, if people want to transfer value: moving money between exchanges, for which USDT, up until fairly recently, was the biggest gas guzzler on Ethereum. Now I think it might be some other DeFi contracts. If you just want to move money between exchanges, if you want to buy goods and services and so on, if you just want to move value around, maybe with some basic conditions, Fuel is basically unmatched compared to other optimistic rollups.

[00:26:59] James: [00:26:59] And Alex, same question. What do you think users care about and what will drive them to ZKsync?

[00:27:05] Alex: [00:27:05] I think that the users in Ethereum simply want to scale. So we are solving a problem. The problem is that gas prices are high and the throughput of blocks is limited, and we are expecting much broader adoption of these blockchain technologies and many more users. So what people are hoping for is to be able to do whatever they do on the Mainnet at a much lower cost and much higher throughput, and they want to do it without giving up any of the properties which make Mainnet Ethereum valuable for them. That means not giving up usability and, most importantly, not giving up security. And this is where ZK rollup absolutely shines. By the way, this is why the statement is important, why people would actually prefer ZK rollup over other solutions: we see that there are other solutions that offer inferior security properties or inferior usability, such as side chains, and they're not getting a lot of adoption.

[00:28:13] Everyone is still waiting and people would rather keep their contracts and protocols and users on the Mainnet and pay high fees than to move billions of dollars on the side chains. So security really is important. And the cool thing about ZK rollup is that cryptographic assumptions aside, you can get exactly the same security guarantees as layer one. So I call it basic security, basic with regard to the ZK rollup protocol itself. So no one needs to do any work to keep the system secure. You can put money there, disappear for a year. All right. Meanwhile, you don't care whether there were any validators, other users, any users at all, you come back and you can be sure that you will get your assets back no matter what.

[00:29:07] And this is unique. Any other scaling solution requires some additional work from some additional actors, who have an active duty to perform to keep the system secure. And these security properties still have limitations. Normally they limit the guarantees severely compared to the Mainnet.

[00:29:30] For example, in the case of optimistic rollups with a one-week delay, you cannot really put more than around $50 million in a single optimistic rollup instance, because you have some specific attack vectors connected to a 51% hash rate attack on the Mainnet, which can just steal all the money from a rollup.

[00:29:57] And the cost of this attack is lower than some dozens of millions of dollars. And the practical cost is actually very low, almost negligible. In a ZK rollup, you can put billions and it's going to be just as secure as layer one. Of course, you can still have bugs in the contracts, or bugs in your zero-knowledge circuits, or in implementations of cryptography.

[00:30:22] But this is something which, first of all, affects all protocols. And second of all, it's going to be subject to very strict review and audits and proving. So it's very different from these ongoing game-theoretical incentives.

[00:30:37] James: [00:30:37] So I want to dig into that a little more. You said that even if there are no validators on the rollup while you're offline, it will still be secure when you get back. In that case, what does the validator do? What's the function that runs it? Is it decentralized?

[00:30:53] Alex: [00:30:53] To produce blocks. The validators and block producers are synonyms here. So you need someone to perform work to keep the rollup active, live, to produce new blocks, and to actually scale operations. If the block producers disappear, you cannot progress. So you cannot have these cheap transactions going forward, but you can always get your money back.

[00:31:17] James: [00:31:17] Okay. When you say block production, presumably you mean also creating the proof for that block? How much does it cost to create that proof? How much does it cost to run the validator right now?

[00:31:29] Alex: [00:31:29] So, the cost of the proofs, amortized per transaction, is actually negligible compared to the cost of the on-chain gas part for rollup transactions. For a simple transfer, for example, right now we're paying something like 0.01 cents per transfer in proof generation costs.

[00:31:55] And we're using common cloud services like AWS or Google Cloud Platform. And the way it works this efficiently is that we only need these cloud instances to produce a block. So once we have a block, we can spin up a new instance, let it produce the proof, and then we shut it down. It all happens on demand, and this can be done by anyone, by any validator.

[00:32:24] James: [00:32:24] Interesting. Jumping back a little bit. John, you said that Fuel is running on UTXOs, and Alex's ZKsync is only payments so far. As far as I'm aware, it seems neither of these systems is handling smart contracts right now. And some of the underlying models here, UTXOs and validity proofs, make it difficult to have arbitrary computations with state.

[00:32:51] Right. That makes it difficult to make the kinds of complex DeFi apps used on Ethereum today. John first, do you think that's a fair assessment, and does Fuel intend to support these things in the future?

[00:33:03] John: [00:33:03] I would say that's a fair assessment. At Fuel, we don't currently support anything that you would call a smart contract, unless you call an HTLC a smart contract. But in the future, we are planning on supporting both Bitcoin-style predicates and fully featured Ethereum-style smart contracts with a rich state that carries over between transactions.

[00:33:27] And I wrote a post about how this can be done in the UTXO data model, which you can find on talk.lazyledger.org, I believe. That's the architecture that we're planning on using for Fuel within the next couple of years.

[00:33:46] James: [00:33:46] Would you say this is one of the major things missing from Fuel right now? I've noticed that Fuel is not on the Mainnet yet. Is this one of the things blocking it?

[00:33:55] John: [00:33:55] Well, you asked two questions. To your first question, do I think this is missing from Fuel? The answer is yes and no. I think this is missing from a kind of final version of what I would want to see in terms of an optimistic rollup, and in terms of Fuel. But is it missing from the current version of Fuel?

[00:34:17] The answer is no. The current version of Fuel is actually undergoing some final security audits. So it should be on Mainnet before the end of the year.

[00:34:25] James: [00:34:25] Oh, that's great. As a kind of general question, it feels like optimistic solutions have in general lagged ZK solutions in deployment. Do you think there's a reason for that, or is it just a coincidence, or timing issues?

[00:34:40] John: [00:34:40] I would say that coincidence and timing issues are good reasons. But is there a reason for that? The answer has to be yes. There has to be a reason why, I mean, ZKsync is on Mainnet, and Loopring, or whatever they call it, is also on Mainnet.

[00:35:01] And I think other ZK rollups are approaching, and ZK rollups have been developed at a pretty astounding pace. So you might think, why is it that people have built these systems with all this advanced cryptography, while the systems without the advanced cryptography, the optimistic rollups, haven't been launched yet?

[00:35:21] Why are they still under active development? Why do they seem to not have so many features at this moment? I think the reason, and I might be a bit biased here, I might not see the whole picture, but at least from where I stand and from what I've seen, is that a lot of the early thought around ZK rollups, after they were introduced by Barry Whitehat and then Vitalik Buterin extended them a bit, was that A, ZK rollups work because of the zero-knowledge proofs, and B, the zero-knowledge proofs were a form of compression.

[00:35:57] And I think the first statement is obviously wrong. And the second, it depends on how you define compression, but I wouldn't call this compression. In fact, this is a big pet peeve of mine, when anyone calls a rollup or a validity proof compression, because you can't uncompress it.

[00:36:12] It's like a one-way function. It's like calling a hash function compression, when you can't really uncompress a hash. So a lot of the way people were thinking about ZK rollups when they were first introduced was that they worked because of the zero-knowledge proof.

[00:36:26] And if you search for the word fraud inside Vitalik Buterin's post on ETH Research, the now famous 500 transactions per second post, which extended the ZK rollup that was introduced by Barry Whitehat, you'll find zero instances of it. So no one was thinking about fraud proofs at the time, because people thought that it worked because of the zero-knowledge proof, and not that rollups can work if you just replace the zero-knowledge proof with a fraud proof plus a timeout. Then maybe the properties change and stuff, but no one thought this was even possible.

[00:36:59] Like no one even thought of it, until I came along. So because of this initial thought that you have to have zero-knowledge proofs, no one developed optimistic rollups, because everyone just thought that this was the only way to do it. After I published my post on optimistic rollups, or, you know, describing the technique that is now known as optimistic rollups, various teams caught on and people started building it out, but it took a while for that mentality to shift. Essentially, it was just a mentality shift. Does that answer your question?
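The construction John describes, a rollup in which the validity proof is replaced by a fraud proof plus a timeout, can be sketched roughly as follows. This is only an illustrative model, not Fuel's or any other project's contract: the class names, the one-week `CHALLENGE_PERIOD`, and the use of a "correct root" as a stand-in for a real fraud proof are all assumptions made for the example.

```python
from dataclasses import dataclass, field

CHALLENGE_PERIOD = 7 * 24 * 3600  # assumed one-week timeout, in seconds


@dataclass
class Block:
    state_root: str
    posted_at: int          # time at which the block was posted on-chain
    reverted: bool = False


@dataclass
class OptimisticRollup:
    blocks: list = field(default_factory=list)

    def propose(self, state_root: str, now: int) -> int:
        """Accept a new block without checking it; return its index."""
        self.blocks.append(Block(state_root, now))
        return len(self.blocks) - 1

    def challenge(self, index: int, correct_root: str, now: int) -> bool:
        """Revert block `index` and everything after it if fraud is shown.

        `correct_root` stands in for a real fraud proof: an actual contract
        would re-execute the disputed transition to obtain it.
        """
        block = self.blocks[index]
        if now >= block.posted_at + CHALLENGE_PERIOD:
            return False  # too late, the block is already final
        if block.state_root == correct_root:
            return False  # no fraud, the challenge fails
        for later in self.blocks[index:]:
            later.reverted = True
        return True

    def is_final(self, index: int, now: int) -> bool:
        """A block is final once the timeout passes without a revert."""
        block = self.blocks[index]
        return not block.reverted and now >= block.posted_at + CHALLENGE_PERIOD
```

In this model a block with a wrong root becomes final if nobody calls `challenge` before the timeout expires, which is the dependence on active watchers that the two guests argue about.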

[00:37:31] James: [00:37:31] Yeah, I think it definitely does. It's really interesting to me in light of our earlier conversation about optimistic rollups keeping people optimistic about scaling solutions for the last year is that we essentially said that the solution invented second and deployed second is what got people excited about the original solution.

[00:37:50] John: [00:37:50] Yeah, I think that's a, it's an interesting way things happen that I'm sure that when people write history books about this if they ever do God forbid, then, it'll be an interesting story.

[00:37:59] James: [00:37:59] Those poor historians. So jumping back to the original question that got us off on this tangent, Alex, my understanding is that ZKsync today does not have support for smart contracts, and that smart contracts are driving the majority of usage of Ethereum right now. I believe that you have plans for adding those. Are those coming in V2, and how do you intend to achieve that in the validity proof model?

[00:38:25] Alex: [00:38:25] So they are coming in V2, and we actually have smart contracts already live on Testnet. We had a test integration of Curve on ZKsync, which anybody can go and check out. We have the code for it. [inaudible], you can check the contracts [inaudible]. And our plan is actually now going much further. So the reason we don't have smart contracts in production yet is that there was a big blocker which did not allow us to implement contracts earlier.

[00:38:57] And that was recursion. You need recursive composition of zero-knowledge proofs because that is one of the ways you can do heterogeneous computation: blocks not only of similar transactions, like just transfers repeated a number of times, but blocks where you can mix different transactions of different weights, with different heavy operations, a different number of storage reads and writes, and so on.

[00:39:27] The other way to do this would be to build something like universal circuits, which just blow up the number of constraints and make the computation very expensive. And this is why people assumed for a long time that building smart contracts on ZK rollups would be infeasible, but recursion changes all of this.

[00:39:48] This is a complete game-changer and we just implemented it this summer. We used it for the Reddit demo because recursion also gives us a significant boost in the compression of computation. Now we're going to use it for building fully compatible Solidity smart contracts.

[00:40:11] So you will be able to take any existing contract from Ethereum in Solidity, and probably also in Vyper, and just port it to ZKsync. Use it there with minimal or no modifications. Just to come back to John's point that ZK rollups are not compression because you cannot uncompress a hash.

[00:40:33] This is actually not entirely true, because you can uncompress a root hash. If you have a Merkle tree, you can always show that you have some balance: you just unpack the Merkle root by providing the Merkle path from your leaf to the root. This way, you've uncompressed the hash, and this is exactly what's being done with these solutions.

[00:40:55] We store the root hash in a contract on Ethereum. And we have this complex validity proof that shows that it actually corresponds to a valid state off-chain. And then any user who knows their account data can prove their ownership and take the money back to the Mainnet.
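What Alex calls unpacking the Merkle root can be illustrated with a small sketch: given only the root, a user who knows their own leaf and the sibling hashes along the path can prove that the leaf is part of the committed state. The example assumes SHA-256, a power-of-two number of leaves, and made-up `name:balance` leaf encodings; a production ZK rollup would use its own hash function and account format.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(leaves):
    """Root of a binary Merkle tree over a power-of-two number of leaves."""
    level = [h(leaf) for leaf in leaves]
    while len(level) > 1:
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


def merkle_path(leaves, index):
    """Sibling hashes from the leaf at `index` up to (not including) the root."""
    level = [h(leaf) for leaf in leaves]
    path = []
    while len(level) > 1:
        path.append(level[index ^ 1])  # sibling sits at the neighbouring index
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return path


def verify(root, leaf, index, path):
    """Recompute the root from one leaf and its path; compare with `root`."""
    node = h(leaf)
    for sibling in path:
        node = h(node + sibling) if index % 2 == 0 else h(sibling + node)
        index //= 2
    return node == root
```

For example, with leaves `[b"alice:10", b"bob:25", b"carol:7", b"dave:0"]`, the holder of `b"bob:25"` needs only two sibling hashes to convince a verifier who stores nothing but the root.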

[00:41:13] James: [00:41:13] So you said that the contracts will be written in Solidity and will run directly on ZKsync.

[00:41:20] But this is, to my understanding, not going to be using EVM bytecode running on ZKsync. Is that correct?

[00:41:28] Alex: [00:41:29] Yes, this is correct. You don't need EVM bytecode. What you need is that you take the logic from the source code, and this logic is enforced. So you don't have to have exactly the same bytecode; optimistic rollups also don't do this. From what I know about Optimism's solution, for example, or [inaudible], they have their own virtual machines where they transpile the bytecode into something different, which is easier to process on Ethereum. So it's not exactly one-to-one bytecode, but you take your existing code. You can read the code and you can audit it, you know exactly what it's doing. You have all your types. Then you have some logic encoded in Solidity, with some constraints, with all of the logic you have, storage checks, and so on, and this logic will be executed just the way you have it in Solidity.

[00:42:30] James: [00:42:30] Help me build some intuition about this. Will each of these contracts require a new circuit?

[00:42:36] Alex: [00:42:36] The contracts will require a new proof. So this is a little bit of magic which is going into the version which we're building, so I would not go into much detail about how exactly we do this. But essentially every contract execution will be

[00:42:57] James: [00:42:57] So each contract execution results in a new proof, all of those proofs are put under the single validity proof that is posted on-chain. Is that correct?

[00:43:07] Alex: [00:43:07] This is correct. And moreover, we will have all the properties from Solidity, including composability. So you will be able to call other contracts and they will return values, and they can call other contracts in turn, and you can have recursion.

[00:43:24] James: [00:43:24] Interesting. How does this compare to Zexe for example?

[00:43:29] Alex: [00:43:29] Zexe is focused on privacy, and that's why they are using a very different architectural approach based on predicates. To port your products to Zexe, you would have to reengineer them entirely to fit into this architecture. And our decision was from the beginning to make it as close to the Ethereum architecture as possible. For example, all accounts in ZKsync have the standard Ethereum addresses.

[00:43:56] So you can actually send tokens now in ZKsync, which is in production, to any Ethereum address, even to smart contracts. And, it will work. It will just create a new account in ZKsync with this address. And then the recipient can always get this to layer one, even if, even if they cannot register, they cannot pass the message to ZKsync.

[00:44:19] So this is just one example. We tried to design ZKsync to be very close to Ethereum, following the same account model, following the same computational model, making signing simple. And the same thing will continue with Solidity. With Solidity, our plan is to have one-to-one portability of all the existing code with just very few exceptions.

[00:44:41] So you probably wouldn't be able to execute very complex cryptography, do some pairing proofs inside ZKsync, but for that, you will be able to have specialized circuits and specialized [inaudible], which will replace the Ethereum precompiles, which we cannot natively support.

[00:45:00] James: [00:45:00] Yeah, definitely. I feel like we've gotten pretty deep into the weeds on ZKsync. And I want to pull back out to a little bit of a higher-level question for both of you. And I'm going to start with John again. John, what failure cases for optimistic rollups, and Fuel in particular, are you worried about?

[00:45:19] John: [00:45:19] Failure cases, in terms of

[00:45:22] James: [00:45:22] Well, what, what can go wrong, and what happens to users? When things go wrong?

[00:45:28] John: [00:45:28] Okay, so what can go wrong is, in an optimistic rollup, if a fraud proof isn't generated within the timeout period, then users can lose funds. Users can have their funds stolen. That's one thing that can go wrong. Another thing that can go wrong is there could be a smart contract bug. And if there's a smart contract bug, this could either prevent a fraud proof from being generated, or it could allow a fraud proof to be generated when it shouldn't be.

[00:45:57] And this could either allow theft of user funds, or it could potentially lock up the chain forever. The latter case is the least likely, because it's a little bit tricky to have a fraud proof that can be generated all the time to make every block fraudulent.

[00:46:15] It's pretty tough, I think, to get into that situation. So those are the worst-case failure modes. The one thing that we've explicitly tried to avoid is user funds being locked simply due to lack of computational resources. One unfortunate thing that people have advertised optimistic rollups as being able to do, in addition to weird stuff like faster confirmation times, is process more than 15 transactions per second.

[00:46:50] That is, while running the EVM as their execution system on the same hardware, or while omitting the hardware requirements to do this. You've probably seen numbers out there on the order of 500 or a thousand or even 10,000, some ridiculous numbers like this, implied for a single EVM optimistic rollup, with no consideration of the hardware requirements that you would need to do this.

[00:47:13] A single system with a global state processing 10,000 transactions per second would require a very powerful computer to handle this. So that's one failure mode that we have tried very hard to explicitly avoid at Fuel. And we made various compromises to achieve this.

[00:47:30] But it means that you can process many more transactions per second on Fuel than you can on Ethereum. And unfortunately, this does mean that the system had to be engineered from scratch, in a way that allows transaction execution with less work per transaction.

[00:47:48] So this is achieved both by adding parallelism to transaction verification and by having a better state access model. And because of this, users of the Fuel chain, or new users of Fuel, don't have to be worried about not being able to eventually withdraw their funds because they can't possibly catch up to the chain, because it's processing so many transactions. So they don't have to worry about this failure case.

[00:48:13] So in terms of the other failure cases, you know, what happens if someone creates an invalid block and a fraud proof isn't posted? I'm not really worried about that. The reason is that in the protocol, as Alex pointed out earlier, there's one actor that has an incentive to validate blocks fully, and that's the block proposers. But extra-protocol, there are actually a few other actors that I believe will more than make up for the lack of in-protocol actors, and that's users that want to be able to accept the blocks as valid quickly without having to rely on anyone else.

[00:48:47] Which is, again, we talked about finality and withdrawal latency and whatnot earlier. But essentially it means that if they want to be able to accept an optimistic rollup block as valid, for example, if it's an exchange accepting deposits from Fuel directly, they would probably run a full node for Fuel, because they don't want to wait two weeks. They want to accept it immediately. And they have all the incentive in the world to run a full node. The other class of actors that I expect to run full nodes, and they have a financial incentive to do so even if it's not in the protocol, is liquidity providers for faster withdrawals.

[00:49:23] So optimistic rollups natively have a, let's say, two-week withdrawal latency, it's some parameter there, but you don't have to wait those two weeks. You can actually alleviate that by exchanging time for money. You can use cross-chain atomic swaps in this case, between the rollup and, say, Ethereum, using things like HTLCs, to immediately withdraw funds within a few minutes from the rollup back to layer one, by just having a liquidity provider negotiate an atomic swap. And further, you can actually trade off, or exchange, liquidity for UX, which is probably the best-case scenario. Because now you went from initially a time problem, which is non-fungible, to a money problem, which is at least fungible, and now to a UX problem.
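The HTLC-based fast withdrawal John mentions can be sketched with a toy hashed timelock contract. This is a simplified model under stated assumptions, not Fuel's implementation: the `HTLC` class, the party names, the amounts, and the timelock values are all hypothetical, and real contracts would also move actual funds and check signatures.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class HTLC:
    sender: str
    recipient: str
    amount: int
    hashlock: bytes      # SHA-256 of a secret chosen by the withdrawing user
    timelock: int        # time after which the sender can reclaim the funds
    settled: bool = False

    def claim(self, preimage: bytes, now: int):
        """Recipient reveals the secret before the timelock to take the funds."""
        if self.settled or now >= self.timelock:
            return None
        if hashlib.sha256(preimage).digest() != self.hashlock:
            return None
        self.settled = True
        return (self.recipient, self.amount)

    def refund(self, now: int):
        """Sender takes the funds back once the timelock has expired."""
        if self.settled or now < self.timelock:
            return None
        self.settled = True
        return (self.sender, self.amount)
```

In a swap, the user would lock rollup funds for the liquidity provider while the provider locks layer-one funds for the user under the same hashlock, with the provider's timelock expiring first. When the user claims on layer one, the revealed secret lets the provider claim on the rollup, so either both legs settle or both can be refunded. The difference between the two amounts would be the provider's fee.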

[00:50:09] This is for fungible assets, of course. As I said earlier, ZK rollups are unmatched when it comes to non-fungible assets, or non-liquid assets in general. So the last thing you can do is, well, you know, people who are going to deposit into the rollup could be used essentially as liquidity providers. So you don't need a dedicated liquidity provider; you can actually have people that are going to deposit anyways. So now you end up with a UX problem, which is fairly easy to solve. You just put some engineers and some designers behind it, and match up people doing withdrawals and deposits.

[00:50:39] And now you basically end up with a situation where anyone can withdraw their liquid funds within minutes, at almost no cost. So I'm not really worried about that failure mode, of not having enough incentivized actors running full nodes and being able to create a fraud proof. To answer your question in like 10 seconds, the two biggest failure modes I'm concerned about are, one, a smart contract bug, which is again why we're undergoing a final security audit, and why we've taken so long to get to Mainnet and why we're not there yet: because we want it to be very safe.

[00:51:08] We are not planning any upgrade mechanism for Fuel v1, or possibly ever, which means that if there is a smart contract bug, then unfortunately things go bad, but it means that we are fully decentralized, unlike a lot of protocols out there. The other thing I'm worried about, which I'll be short about, is if there is simply no one using the system whatsoever.

[00:51:28] I said there were a bunch of people that are incentivized to run full nodes, but that's assuming that people are actually using the system. One particularly scary failure mode is, what happens if straight-up no one is using the system? And I don't really know what will happen in the future.

[00:51:43] Hopefully we don't end up in that failure mode.

[00:51:45] James: [00:51:45] So really quick, you talked a lot about full nodes, about light clients, about how expensive it may be to run a full node on one of these rollups, and about user funds being locked. I know the thing that you are dancing around, but can you state it outright for the people listening? What happens to users' funds, and what locks them?

[00:52:08] John: [00:52:08] Sure. One of the whole reasons that rollups put all the data on-chain is that in the worst case, everyone, all the block proposers, they just disappear. And then you, the user needs to be able to produce a new block in some way, it could be producing a new block. It could be putting a single state transition, but you need to produce a new block in some way.

[00:52:30] And generally to produce a new block, you need to know what your coins are. You need to know a proof for your coins. And this is especially relevant in the accounts data model. It's actually not so relevant in the UTXO data model, ironically, but in the accounts data model it is very important. Let's consider the case of a ZK rollup, so we don't have to bother with the complexity of optimistic rollups. In order to produce a new ZK rollup block, you need to make a ZK proof that takes, as a witness, a Merkle branch to your account, which is a leaf in the whole state tree.

[00:53:06] And you need to provide that as a witness. That's a private input into the proof. So if you don't have an up-to-date Merkle proof of your account against the latest state of the ZK rollup, you can't spend those funds. What this means is, in the worst case, if all the block proposers disappear, you must fully execute and fully apply every single state transition from the genesis of the rollup chain to get the entire final state, which you can then use to produce a new block. And in my opinion, which apparently isn't shared by everyone in the Ethereum community, you need to measure systems based on their worst-case performance and not on their average or best-case performance.

[00:53:51] James: [00:53:51] So the summary there is that users of a rollup need to either run a full node or trust that someone else out there will give them that state proof on demand.

[00:54:04] Is that fair?

[00:54:06] Okay. Getting back to Alex. Do you think that is a fair assessment of the exit strategy for a failed ZK rollup? What other failure cases are you worried about and how do you address them?

[00:54:19] Alex: [00:54:19] So firstly, I'll address this particular case. It's not correct to say that you need to run a full node. You just need access to an Ethereum archive node. So what you need to do is to be able to sync the full history of Ethereum blocks, which contain the state transitions. And then you apply the state transitions to your state and recompute the hash, and the application of the state transitions actually takes less time than you need to download the full Ethereum archive.

[00:54:54] So by the time you download it and sync your full Ethereum node, you have already reconstructed the state and you can immediately use it. And you only do it in rare cases. And I think as long as Ethereum itself relies on full archives and full nodes for holding the previous state, it's safe to assume that this is a good way to exit. So you don't rely on anyone else
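The recovery procedure Alex outlines, replaying the state transitions published on layer one and recomputing the committed hash, might look roughly like this. It is a sketch under simplifying assumptions: blocks are plain dictionaries of transfers, and the state commitment is a SHA-256 hash of the serialized balances rather than the Merkle root a real rollup would use. All function and field names are invented for the example.

```python
import hashlib
import json


def state_root(balances: dict) -> str:
    """Stand-in commitment: hash of the canonically serialized state."""
    encoded = json.dumps(balances, sort_keys=True).encode()
    return hashlib.sha256(encoded).hexdigest()


def apply_transfer(balances: dict, tx: dict) -> None:
    """Apply one transfer that the validity proof already vouched for."""
    balances[tx["from"]] = balances.get(tx["from"], 0) - tx["amount"]
    balances[tx["to"]] = balances.get(tx["to"], 0) + tx["amount"]


def rebuild_state(genesis: dict, onchain_blocks: list) -> dict:
    """Replay every rollup block published on layer one, oldest first."""
    balances = dict(genesis)
    for block in onchain_blocks:
        for tx in block["txs"]:
            apply_transfer(balances, tx)
        # Each replayed block must reproduce the root stored on layer one.
        if state_root(balances) != block["root"]:
            raise ValueError("replayed state does not match on-chain root")
    return balances
```

The replay needs only data that is already part of the layer-one history, which is the basis for Alex's point that no rollup operator has to cooperate. John's objection concerns the cost of this worst case, since the loop runs over every block since genesis.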

[00:55:18] James: [00:55:18] To check my understanding here: when I want to exit this ZK rollup, I need to know what the current root hash is. So I need to have an Ethereum client of some kind, so I can know what it is on layer one. And then I need to have the current leaf, my leaf in the state tree that connects to that root hash.

[00:55:40] So I need to have some sort of access to the rollup's state. So in each of those two cases, I need to either be running it myself, or I need to be talking to some trusted, or very low trust, third party to get that. Right?

[00:55:55] Alex: [00:55:55] To a trustless third party, because you will verify that they gave you the correct information by just matching the Merkle paths. But you can also just reconstruct it from the Ethereum blocks.

[00:56:06] James: [00:56:06] Right. As long as I have an Ethereum node.

[00:56:09] Alex: [00:56:09] Exactly. But for you to download and execute all, like sync the full Ethereum node with Turbo-Geth, I think it took something like one week.

[00:56:19] And then if users are affected in some rollup, I imagine that someone will do this and then just share a [inaudible] file with the latest state, which is going to be small. So we can say, for example, if we have 1 billion accounts in a ZK rollup, then the full state with the hashes of other users will be something like just a couple of gigabytes.

[00:56:46] So it's 14 gigabytes. So it's not that much. It's like a

[00:56:52] James: [00:56:52] So it's like a small additional assumption that you make when you access Ethereum, right? You need the Ethereum state somehow and you need the ZK rollup state somehow. It's usually not very difficult to get those things. I think a lot of us use MetaMask in the browser, and it does that automatically anyway, but it is like an additional little wrinkle in the users' security assumptions.

[00:57:17] Alex: [00:57:17] I would say it's not an additional assumption. The assumption for the safety of the ZK rollup is that Ethereum is safe. The assumption is that your funds are going to be as safe as though they are in Ethereum. Now, if you would just operate a light client in Ethereum, you might be denied access to the latest state of your Ethereum funds.

[00:57:37] And then you won't be able to do anything. In order for you to be able to access Ethereum, you rely on someone to operate full nodes. And you rely on the state being publicly available. So

[00:57:47] James: [00:57:47] Great.

[00:57:49] Okay. Sorry to interrupt, please continue. What other failure cases are you worried about and how do you address those?

[00:57:56] Alex: [00:57:56] As I said before, in ZK rollups, you can only fail if you implement your contracts in the wrong way, and you have bugs and someone exploits those bugs, or if you have bugs in your zero-knowledge circuits or in cryptography, which is essentially the same thing: bugs in your protocol. So this is, of course, an area of possibility.

[00:58:18] So you have to spend a lot of effort on the design, testing, audits. Simplicity in design is especially important. Making code readable, making it logically structured, as with any other protocol.

[00:58:35] James: [00:58:36] Are you at all worried about, like validators disappearing? What happens to users, if nobody is posting new blocks?

[00:58:44] Alex: [00:58:44] So then, in the current version, ZKsync 1.0, users would have to do the full exit, where you get the rollup state off-chain from the blocks. Then you construct the Merkle proof for your account and you go on-chain and make a withdrawal, which might be comparable to a mass exit problem, except that you don't have any deadlines.

[00:59:09] You can do it any time, but you still need to make a quick transaction on layer one, which is unpleasant. If you have a lot of users, millions of users, then we actually want to avoid that. Most users will create their accounts and live entirely on layer two. So we want to avoid a situation where all of them have to withdraw to layer one.

[00:59:31] That's why we're planning for ZKsync version 2, a mass migration mechanism where you will be able to have these enforced exits en masse, with all the users to some alternative version of a rollup. Should something happen to the validators of this one, and should it be unrecoverable for some reason.

[00:59:56] James: [00:59:56] Okay. Interesting. So I guess John's position here is that ZK rollups require an assumption that there's an honest node willing to give you the state of the chain and audience member Nick White asks, If that same node could as easily produce a fraud-proof, why do we prefer ZK proofs?

[01:00:21] Alex: [01:00:21] That that's,

[01:00:23] Yeah. The fact that you can produce a fraud proof doesn't mean that you will produce a fraud proof. And it doesn't mean that your fraud proof is going to be included. We are just back to the discussion about the security of the rollups. But there is no assumption in ZK rollup that someone will give you the state.

[01:00:41] You can always go and get the state from Ethereum. That's why it's called a rollup and not a Validium. In a Validium, which he probably refers to, indeed, the state is held off-chain. So Validium is something that can be understood as a ZK plasma. Your state is completely off-chain. You don't share it through the Ethereum network, so full nodes in Ethereum do not have an imprint of it.

[01:01:07] And then yes, you rely on someone to give you this data. And if they don't give you this data, then your funds will be frozen. But that's not ZK rollup. That's Validium.

[01:01:17] James: [01:01:17] For context, are there any Validiums running on-chain today? Any names that people would recognize?

[01:01:23] Alex: [01:01:23] Sure. I think DeversiFi runs it with [inaudible]. And this is a Validium system.

[01:01:28] James: [01:01:28] Oh, so that would be the StarkEx system.

[01:01:31] Alex: [01:01:31] StarkEx. Yes. I think the newer version of StarkEx supports ZK rollup mode as well. But I think the current deployment was in Validium.

[01:01:41] James: [01:01:41] And,

[01:01:43] Alex: [01:01:43] That's the case,

[01:01:44] James: [01:01:44] And would you say the substantive difference there is where the data is made available?

[01:01:49] Alex: [01:01:49] Whether the data is made available through Ethereum calldata. So whether the data is broadcast through the Ethereum network as a part of Ethereum blocks.

[01:02:00] James: [01:02:00] Okay. So if Validium corresponds to ZK rollups, loosely speaking (they use validity proofs on some set of data, but the data is published in different places), is there a system that corresponds to optimistic rollups in the same way?

[01:02:20] Alex: [01:02:20] Yes, it's Plasma.

[01:02:20] Okay. Interesting.

[01:02:21] Richard: [01:02:21] So there's a comment from @lastMJS. He says, "It's not really a debate as far as I've observed. I'm pretty sure most people agree that ZK is the end goal and optimistic rollups are a good short-to-medium-term solution." What are you guys' thoughts on that? Maybe starting with Alex.

[01:02:38] Alex: [01:02:38] I definitely agree with the statement. But as I said earlier in the conversation, I'm afraid that optimistic rollups will be launched already as an obsolete solution. Because ZK rollups have a number of advantages, which we discussed: most importantly security, but also usability and capital-efficient exits.

[01:02:59] Because if you have to use external liquidity providers, it's quite expensive and you probably can't do it to move large fractions of your rollup. If a lot of users want to move their liquidity to a different system because they get better yields there or whatnot, they won't be able to do it. But there is one overlooked issue as well.

[01:03:22] Right now ZK rollups are already cheaper and they will always remain cheaper than optimistic rollups because the most expensive part of transactions in both systems is the on-chain data gas costs. ZK rollups just generally, for most transactions, need to put less data on-chain.

[01:03:45] So for example, if you want to maintain Ethereum compatibility with the same signature format, the same signature scheme, and you don't want to have these TrueBit-style fraud proofs in multiple steps, then you actually need to put a lot of data on-chain for each transaction. So 65 bytes for the Ethereum signature, plus one intermediate hash for the state after this transaction, plus the inputs, the full input of the transaction.

[01:04:16] Whereas in a ZK rollup system, you omit the signature entirely. Even if you use Ethereum-compatible ECDSA, you can just omit it entirely. You omit the intermediate hash and you can also omit a lot of inputs in various contracts. So essentially you omit all the inputs; you only publish the outputs and whatever changes in the storage, which is going to be a lot less.

[01:04:41] And it's already a lot less in the case of payments. And we will also be able to support smart contracts with Solidity very soon. So potentially earlier or soon after optimistic rollups launch with this solution. So I just really don't see any benefits of optimistic rollups.
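[Editor's note] The per-transaction data breakdown Alex gives above can be put into rough numbers. The following is a minimal sketch, not a measurement of any real rollup: only the 65-byte signature figure comes from the discussion, while the 32-byte state hash, the example input and output sizes, and the function names are assumptions chosen for illustration. Gas is priced at 16 per non-zero calldata byte (EIP-2028), treating every byte as non-zero to get an upper bound.

```python
# Rough per-transaction calldata comparison, following the breakdown above.
# Only the 65-byte signature comes from the discussion; every other number is
# an illustrative assumption.

SIGNATURE_BYTES = 65         # Ethereum ECDSA signature (r, s, v)
STATE_HASH_BYTES = 32        # assumed size of one intermediate state hash
GAS_PER_NONZERO_BYTE = 16    # calldata price per non-zero byte since EIP-2028


def optimistic_tx_bytes(input_bytes: int) -> int:
    """Signature + intermediate state hash + full transaction input."""
    return SIGNATURE_BYTES + STATE_HASH_BYTES + input_bytes


def zk_tx_bytes(output_bytes: int) -> int:
    """Only outputs / storage changes are published; no signature or hash."""
    return output_bytes


def calldata_gas_upper_bound(n_bytes: int) -> int:
    """Upper bound on calldata gas: price every byte as non-zero."""
    return n_bytes * GAS_PER_NONZERO_BYTE


if __name__ == "__main__":
    # Hypothetical transfer: 100 bytes of input, 40 bytes of state changes.
    for name, size in [("optimistic", optimistic_tx_bytes(100)),
                       ("zk", zk_tx_bytes(40))]:
        print(name, size, "bytes,", calldata_gas_upper_bound(size), "gas")
```

This sketch deliberately ignores the fixed cost of posting and verifying the validity proof, which is amortized over a batch, and the option of aggregating signatures in an optimistic rollup. Both points come up in John's response below.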

[01:05:01] James: [01:05:01] John, Alex has some pretty strong language there. He said obsolete, no benefits. How would you respond to that?

[01:05:10] John: [01:05:10] Sure. I would say that, first of all, in terms of ZK rollups being always cheaper, that's both true and not true, in the sense that ZK rollups can be cheaper since you can omit witness data, but it's not necessarily always true. Take a system that just handles simple payments, for example, where you accept an interactive verification game, because there's nothing inherently wrong with that. The guys at Offchain Labs have built [inaudible], which today is the first EVM-compatible rollup, not just optimistic rollup, that is currently out on testnet. You know, if you want to build out Solidity contracts and stuff and do it in a rollup, you don't have to wait for other chains.

[01:05:52] You can just use theirs right now. So they have a system that works perfectly fine with an interactive verification game. And if you are willing to accept that, you don't need intermediate state roots, and you can compress witness data with a BLS signature. So it is not always the case that ZK rollups are cheaper. In this one instance, you can construct an optimistic rollup that just handles payments, and it will be cheaper than a ZK rollup, because the compressed BLS signature will be smaller, and it'll be cheaper in general just to post it on-chain than it would be to both post and verify the zero-knowledge proof of the same state transitions in the ZK rollup. Where I think ZK rollups are particularly exciting is where they can verify, or rather prove, a large amount of computation that results in a small number of state transitions.

[01:06:36] So in terms of whether or not optimistic rollups are obsolete from the outset versus ZK rollups, I would say not necessarily, because we can't necessarily put cryptographic assumptions aside. We've seen many cases of cryptography being broken, and the fewer cryptographic assumptions we make the better.

[01:06:56] This is not a small assumption that you can just put aside. There have been many suggestions for things like STARK- or potentially SNARK-friendly hash functions. And I've seen a few being proposed throughout the years. And then, every few months, they'll say, "Oh wait, actually the one we proposed three months ago is broken with this attack.

[01:07:13] Here's a new one. We promise this one has no attack vector." And this has happened several times throughout the past few years. Even something as simple as a hash function is difficult to get right. Building out an entire system with this novel cryptography is actually a strong assumption.

[01:07:28] And this could take a while. So in terms of whether or not this is the end goal, as that Twitter user said, I would actually agree with him and say that not just ZK rollups, but validity proofs, are the end goal, right? The problem is: what does short to medium term mean?

[01:07:46] And I think some people read that and say short to medium term means six months, so optimistic rollups will be obsolete in six months. But what if that's 20 years, right? If it's 20 years, then that is, in the grand scheme of things, a short period of time. And if we have fully functional zero-knowledge proofs that can do all this amazing stuff 20 years from now, that's actually going to be an amazing accomplishment. And then it will have been a short amount of time, but that's not today.

[01:08:10] James: [01:08:10] So is your argument there that it is significantly easier to get the semantics of a fraud proof correct than to get the semantics of a zero-knowledge proof correct?

[01:08:20] John: [01:08:20] Considering everything, yes. By everything, I mean it's not just writing some circuits, but it's also all the cryptographic assumptions and the proofs and math and stuff behind [inaudible]. Considering everything, yes. I would say it's much easier to get fraud proofs correct than validity proofs.

[01:08:36] James: [01:08:36] You mentioned SNARK-friendly hash functions specifically. Are there any other cryptographic primitives that you are worried about?

[01:08:43] John: [01:08:43] I'm not a hundred percent sure what the latest and greatest is in what people suggest to use for ZK proofs, but the recursive SNARKs, whatever construction is used for recursive SNARKs, I believe that's novel. There's also some novel cryptography in that we don't want to have a trusted setup for SNARKs.

[01:09:01] So I think with no-trusted-setup or universal-trusted-setup SNARKs, there's some novel cryptography there. There has also, I think, been the use of different signature schemes. I don't remember which one, but I want to say EdDSA. And I've been told that EdDSA is substantially better than ECDSA.

[01:09:21] Because ECDSA is garbage. Of course, if you looked at a fairly recent Twitter thread, it turned out that EdDSA has malleable signatures, or rather the way you verify signatures is malleable. And basically, there's not a single implementation out there that actually conforms to the standard proposed by an RFC, which means that if any blockchain or any system uses EdDSA and has two clients written in different languages, this could result in consensus failure.

[01:09:48] So basically this shows that any blockchain that has used EdDSA should not have done that. They jumped the gun because they heard, "Oh, it has these nice properties, these amazing properties, so much better than ECDSA." It turns out that was a terrible idea. And they only learned this later. Various blockchains use EdDSA.

[01:10:04] They've been using it for a couple of years. None of them should have used it, because the verification is malleable.

[01:10:09] James: [01:10:09] I'm aware of blockchains that have been using EdDSA for at least five years. But ZKsync, as far as I know, does not use EdDSA. Your argument there, I think, is more general: that cryptography needs to be thoroughly vetted over many years.

[01:10:25] Do you have a lower threshold?

[01:10:27] What's the minimum amount of vetting time for you?

[01:10:30] John: [01:10:30] Oh, I would say a decade. It's on the order of decades.

[01:10:34] James: [01:10:34] You're not a fan of Keccak.

[01:10:36] John: [01:10:36] No, I hate Keccak. SHA-3, they're all terrible. They're all terrible, but no, I'm not a fan of any of the new hash functions. It's like one of those things: if you put a gun to my head and told me to decide which hash function I would use, if I could only use one of them, maybe I would pick SHA-3 because it's the standard, but they're all terrible.

[01:10:56] James: [01:10:56] I don't think anyone has ever threatened me to make me choose between cryptographic primitives. I want to give Alex a chance to talk about this a little more. Alex, what new primitives are you guys using? What new constructions? And do you have reason to believe that they're safe to use?

[01:11:15] Alex: [01:11:15] Absolutely. Some of the newer cryptographic primitives that were mentioned are used by Ethereum already. It's clear that it's not about some abstract considerations; you cannot be better than the base system you rely on. Ethereum is going to use BLS signatures in ETH 2 very soon, and PLONK, which we use, actually relies on cryptographic assumptions very similar to those of BLS signatures. Our cryptography team would be better able to talk about this than me, but the cryptographic assumptions are definitely a concern. So we only use things which have been known for a while and that have been thoroughly audited. And we do not use any esoteric assumptions, such as knowledge of exponent.

[01:12:02] So we rely on very conservative cryptographic assumptions. Now, whenever someone says that some primitive is broken, what this means in practice is that there is a theoretical way to exploit it. You can have some bias in the hash function, so it's not going to be entirely random, or under some circumstances you can increase the efficiency of a brute-force attack on these cryptographic parameters. But that means that you will be able to break things at much lower security margins than those used in actual production systems. So by the time the academic community, the cryptographic community, discovers some vulnerability,

[01:12:52] you still have plenty of time to replace these primitives with something better, because from the time of discovery of potential vulnerabilities to the time of actual attacks, it takes years to be able to exploit them. So I would not be worried about that. I would be worried generally about any complex systems, including ZK and optimistic rollups, where people just put smart contracts on Ethereum, plain smart contracts, without a proper audit or even with an audit. When people rush to deploy large amounts of funds into very new systems, I would rather take a gradual approach and let the systems grow naturally over time, with capital being exposed to the world, to potential attackers, and see that nothing happens for a while. Then you can be sure that the systems work. I think this is true for ETH 2, for proof of stake, for sharding, as well as for both families of rollups. And of course, you have some hard limits which, when you hit them, mean you're in trouble, like the maximum amount of funds which you can securely place in an optimistic rollup. But apart from that, all systems undergo this adoption process.

[01:14:14] James: [01:14:14] So would you say that there is not significantly more risk from new cryptography than you get anyway in the Solidity implementation of your contracts and system?

[01:14:25] Alex: [01:14:25] Oh, yes, absolutely. The cryptography we're using is very well understood. We're going through audits and we're talking to people, and there is a growing body of cryptographers who understand these protocols very well. They are built out of atomic blocks, and then these blocks are being reused in composable ways.

[01:14:45] So you have your basic assumptions, which have been validated in other production systems for years, and then they are being applied here. And I'm absolutely not worried about this particular part as being something of an extra threat. I would be more worried about just making mistakes.

[01:15:07] James: [01:15:07] Interesting. So you are more worried about the Solidity implementation and about the validators, like uptime and reliability, than you are about the cryptography involved.